Preface #

There’s a good chance that if you’ve come across this article, it’s because you asked me what control theory is. This article exists because I love control theory and people keep asking me what it is. This is my definitive response: a “What is control theory?” for non-engineers.

What is Control Theory #

By Example #

Control theory is the field of controlling systems to do what you want them to do. If you think that description sounds vague and abstract, you’re absolutely right to think so, because it is. Control theory is an abstract field that applies to a wide variety of different things.

It’s a bit easier to explain with examples, and control theory is absolutely pervasive throughout much of what we consider high-tech engineering today. Control theory is the central idea behind Boston Dynamics’ robots (No, they don’t use any Machine Learning!). Control theory is what lands SpaceX’s rocket boosters back on Earth (and it is central to rockets, satellites, etc. in general). It’s what drives the self-driving cars that are being developed as we speak. It’s what keeps our drones stable, or lets them perform acrobatics autonomously.

It’s also what controls the power of your air conditioner or your heater’s heating elements to maintain a temperature. Or what controls how much of each reactant is fed into a chemical plant to maximise yield. Or the cruise control that keeps your car at the right speed, robust against aerodynamic drag and adaptive to the incline your car is on.

By Theory #

I’m sure you get the point of what control theory can do. Now the question is how does that translate to the theory: How do we represent “systems”, how do we decide how to control them and how do we implement this?

We’ll start off with some system. You’ll notice that what all our examples so far have in common is that they can be modelled. They obey the laws of physics, and hence we can come up with ways to describe how they work. When we talk about systems in control theory, we’re referring specifically to dynamical systems. I’m going to limit myself here to continuous dynamical systems, which are described by differential equations, but the concepts transfer over to other types. A general continuous dynamical system is described by $\dot{x} = f(x, t)$, where $\dot{x} = \frac{dx}{dt}$ as in Newton’s calculus notation. That is to say, the system has some state $x$ that changes over time on its own. You might recognise this as a general ordinary differential equation. Keep in mind that $x$ may be a vector.

More specifically, we have systems that can be controlled and hence depend not just on the state they’re in, but also on some control input, $u$, so that $\dot{x} = f(x, u, t)$. For example, your heating element changes temperature not only because it’s cooling down due to its state (of high temperature), but also because of the voltage/current input that you give your heater.
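To make the idea of a state changing under a control input concrete, here is a minimal sketch of the heater example. The model (Newtonian cooling plus a heating term) and every number in it (`ambient`, `loss`, `gain`) are illustrative assumptions, not measurements of a real heater, and forward-Euler integration is just the simplest way to step the equation.

```python
def heater_dynamics(temp, power, ambient=20.0, loss=0.1, gain=0.5):
    """x_dot = f(x, u): cooling towards ambient plus heating from the input.

    All parameters are made-up illustrative values.
    """
    return -loss * (temp - ambient) + gain * power


def simulate(f, x0, u, dt=0.01, steps=10_000):
    """Forward-Euler integration of x_dot = f(x, u) with a constant input u."""
    x = x0
    for _ in range(steps):
        x += dt * f(x, u)
    return x


cold = simulate(heater_dynamics, x0=20.0, u=0.0)  # no input: stays at ambient
warm = simulate(heater_dynamics, x0=20.0, u=2.0)  # constant input: settles higher
print(cold, warm)
```

With no input the state sits at the ambient temperature; with a constant input it settles where heating and cooling balance. Nothing here is "control" yet, since the input is fixed in advance rather than chosen from the state.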

Control theory revolves around the idea of choosing $u$ such that $x$ tends towards values we want it to have. The desired values can range from something as simple as an intended temperature set point in a heater to something as complex as a trajectory for an entire leg to follow in a state-of-the-art robot.

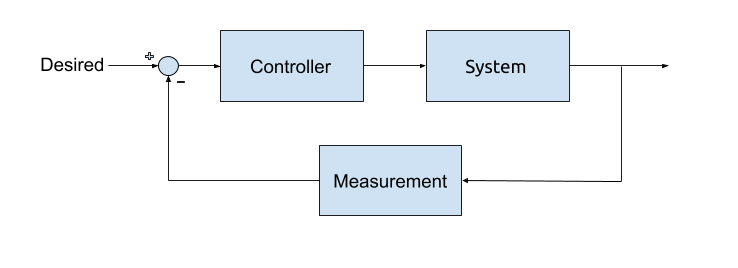

We do this through the idea of feedback control. You are probably informally familiar with the idea of feedback control to some extent. The idea is to have a continuously occurring loop of measuring what the current state is, working out how far you are from your desired state, and then taking the appropriate action to reduce your error.
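The measure, compare, act loop can be sketched in a few lines. This is only an illustration under assumptions: the heater model and its parameters are invented, and the controller is a plain proportional one (input proportional to the error) with a hypothetical gain `kp`, which is one of the simplest possible choices rather than the way any particular heater is built.

```python
def heater_dynamics(temp, power, ambient=20.0, loss=0.1, gain=0.5):
    """Illustrative heater model: cooling towards ambient plus heating."""
    return -loss * (temp - ambient) + gain * power


def run_feedback_loop(setpoint, temp0, kp=2.0, dt=0.01, steps=10_000):
    """Repeat: measure the state, compute the error, act on it."""
    temp = temp0
    history = []
    for _ in range(steps):
        error = setpoint - temp        # how far are we from where we want to be?
        power = max(0.0, kp * error)   # act on the error (a heater cannot cool)
        temp += dt * heater_dynamics(temp, power)  # the system responds
        history.append(temp)
    return history


history = run_feedback_loop(setpoint=25.0, temp0=20.0)
final_temp = history[-1]
print(final_temp)
```

In this sketch the temperature rises towards the set point but settles slightly below it. That leftover offset is a known limitation of purely proportional control, and it is one reason practical controllers often add an integral term.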

Why Control theory is amazing #

There are various reasons I love control theory, and I think they can appeal to all sorts of people.

Amazing results #

This reason is much more universal than the rest, and it’s why I started off this blog post by showing example photos and GIFs. It is awe-inspiring to see a humanoid robot do a backflip, a drone jump through crazy small gaps, or a massive rocket booster land on a barge in the middle of the ocean. Control theory lets us perform amazing tasks and take advantage of the agile and capable marvels of engineering that we’ve fine-tuned over decades. For many systems it provides a genuine spectacle, which makes it exciting for students of control and the general public alike.

Mathematically heavy #

I think the most common reason people get into control theory is how mathematical it is. It’s one of the most mathematical fields of engineering, enough so that it is often classified as a field of applied mathematics, and it is one of the few engineering fields where proofs are so highly valued.

It’s often split into “Classical control” and “Modern control”, where Classical control draws from signal theory and the analysis of linear dynamical systems using methods in the frequency domain (after Laplace or Fourier transforms), and Modern control is broader and draws from everything from non-linear dynamics and chaos theory to differential geometry.

Classical control theory is full of complex analysis and harmonic analysis applied to linear systems. This is where most people are first introduced to control. What makes Classical control so fun is the many distinct layers of abstraction you build up. For example, a common method for designing controllers for linear systems, taught in pretty much every introductory control theory course, is the root-locus method. To understand it you have to understand linear time-invariant system behaviour, how such systems can be described by convolutions, how convolution becomes multiplication in the frequency domain, how transfer functions are formed, how they can be described by poles and zeros, how poles and zeros behave under feedback, and how the locus of closed-loop poles is traced out starting from the open-loop poles. That is many layers of intuitive understanding to build, and that can be very exciting for the more mathematically minded.
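As a small taste of the last layer, here is a sketch of a root locus computed by brute force. It assumes a made-up open-loop transfer function $G(s) = \frac{1}{s(s+2)}$ in a unity-feedback loop with a proportional gain $K$, so the closed-loop poles are the roots of $s^2 + 2s + K = 0$. The plant and the gain values are purely illustrative.

```python
import cmath


def closed_loop_poles(k):
    """Roots of s^2 + 2s + k = 0, the closed-loop characteristic equation
    for the assumed plant G(s) = 1 / (s (s + 2)) with feedback gain k."""
    disc = cmath.sqrt(1 - k)
    return (-1 + disc, -1 - disc)


# Sweep the gain and watch where the poles go: that path is the root locus.
for k in (0.0, 0.5, 1.0, 2.0, 5.0):
    p1, p2 = closed_loop_poles(k)
    print(f"K={k}: poles at {p1:.3f} and {p2:.3f}")
```

At $K = 0$ the closed-loop poles coincide with the open-loop poles at $0$ and $-2$. As $K$ grows they move towards each other along the real axis, meet at $-1$, and then split into a complex pair, which corresponds to an increasingly oscillatory response.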

Modern control theory is a beast of its own, with many subdisciplines that draw on many different mathematical fields, much more flexibility in theory and methods, and the added excitement of still being an active research field. Between the two, control theory is an absolute blast for someone who likes systems described by differential equations and loves mathematics enough to invest in a field about abstractly solving the problem of how to control them.

Interdisciplinarity #

Are you interested in computer science? Or mathematical optimization? Have you heard of dynamic programming? It’s a technique used in optimization and CS that consists of breaking your problem up into smaller subproblems that “overlap with each other”, arranged in a recursive substructure that guarantees your solution is optimal if you have the optimal solutions to the smaller problems. The field was kick-started by mathematician Richard Bellman at the RAND Corporation, who by his own account chose the name dynamic programming to keep the work from sounding too much like mathematical research to the officials funding it.

Dynamic programming has natural connections to optimal control theory, the branch of control theory that treats control as an optimisation problem: control this spacecraft to get to this point using a minimal amount of fuel, or get this robot here while minimising the amount of torque used, etc.
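Here is a minimal sketch of that connection on a toy problem. Everything in it is an assumption made for illustration: the state is an integer position on a small grid, the control moves it by at most one cell per step, and the cost penalises both distance from zero and control effort. The solver works backwards from the final time, which is Bellman’s recursion in its simplest discrete form.

```python
def solve(horizon=5, states=range(-3, 4), controls=(-1, 0, 1)):
    """Finite-horizon dynamic programming by backward induction."""
    states = list(states)
    value = {x: float(x * x) for x in states}  # terminal cost: distance from 0
    policy = []
    for _ in range(horizon):
        new_value, step_policy = {}, {}
        for x in states:
            best = None
            for u in controls:
                nxt = x + u                    # toy dynamics: x_next = x + u
                if nxt not in value:
                    continue                   # stay inside the grid
                cost = x * x + abs(u) + value[nxt]  # stage cost + cost-to-go
                if best is None or cost < best[0]:
                    best = (cost, u)
            new_value[x], step_policy[x] = best
        value = new_value
        policy.append(step_policy)
    policy.reverse()  # policy[t][x] is the best control at time t in state x
    return value, policy


value, policy = solve()

# Roll the optimal policy forward from x = 3.
x, path = 3, [3]
for step_policy in policy:
    x += step_policy[x]
    path.append(x)
print(path, value[3])
```

The key line is `cost = x * x + abs(u) + value[nxt]`: the best cost from a state is the immediate cost plus the best cost from wherever you land, so each time step only needs the solution of the step after it.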

While dynamic programming in general was created for work on discrete systems, you can extend the technique to the continuous domain, where you arrive at the Hamilton-Jacobi-Bellman (HJB) PDE. It both gives the solution of a continuous optimal control problem and acts as a principle of optimality you can check your answer against (assuming it satisfies some constraints).
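For reference, one common way of writing the Hamilton-Jacobi-Bellman equation is given here. The notation is an assumption chosen for this sketch: the dynamics are $\dot{x} = f(x, u, t)$, the cost to minimise is $\int_t^T \ell(x, u, \tau)\, d\tau + \phi(x(T))$ with running cost $\ell$ and terminal cost $\phi$, and $V(x, t)$ is the optimal cost-to-go from state $x$ at time $t$.

$$
-\frac{\partial V}{\partial t}(x, t) = \min_{u} \left[ \ell(x, u, t) + \nabla_x V(x, t)^{\top} f(x, u, t) \right], \qquad V(x, T) = \phi(x)
$$

Read from right to left, it is the continuous-time version of the dynamic programming recursion: the optimal cost-to-go decreases over time at exactly the rate of the best achievable sum of running cost and change in cost-to-go along the dynamics.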

This equation is not only a central equation in the theory of optimal control, but it can be considered a generalisation of the Hamilton-Jacobi reformulation of classical mechanics in Physics. In fact, much of Optimal Control theory can be considered generalised analytical mechanics, with its own Lagrangians and Hamiltonians related by Legendre transforms, minimising some functional.

This is one of my favourite parts of control theory. As a field within mechanical and electrical engineering, it has the potential to reach into a very wide variety of other fields. This comes not just from varying the systems that we study (we can obviously change our system to one that models social phenomena, etc.), but also from vastly different changes in the theory. From mathematics and CS, we get graph-theoretic control solutions. From economics, game-theoretic control. From physics, calculus-of-variations methods in the style of analytical mechanics.

Studying dynamics of systems #

This part isn’t strictly about control theory but about the fields related to it. Control theory is all about studying how we can control dynamical systems with some given dynamics. As a result, control theory lends itself pretty heavily to studying the dynamics, or the behaviour, of specific systems: how to model them, their properties, when they are stable or not, whether they are chaotic, etc. Studying the dynamics of these systems is a large field of its own and deserves its own post, but control engineers are generally intimately familiar with it, both because the responsibility of modelling systems accurately falls on them and because of the shared theory.

It’s often just as fun analysing the aerodynamics and mechanics of a new aerial vehicle design with passive stability, or of a mechanism that uses propeller pitch control for better properties, as it is designing methods to control the craft itself. Similarly, we often have systems that don’t have strict physics governing them (e.g. the behaviour of electrical grids is highly dependent on the weather and how people use electricity), and studying the dynamics of such a system can be a fun endeavour.