Portfolio #

Welcome to the semi-secret portfolio site! This is where I show off some of the cool things I’ve worked on.

Current research #

My current research involves combining imitation learning with traditional optimisation-based methods for motion planning and control, using diffusion models as a bridge. Specifically, I’m interested in generative AI models like diffusion and flow matching, and:

- How optimal control can be used to improve policies learned via imitation learning

- How diffusion and flow matching can be used to improve sampling-based optimal control methods

- How we can use generative models to bridge between state-based optimal control and high dimensional observations like images

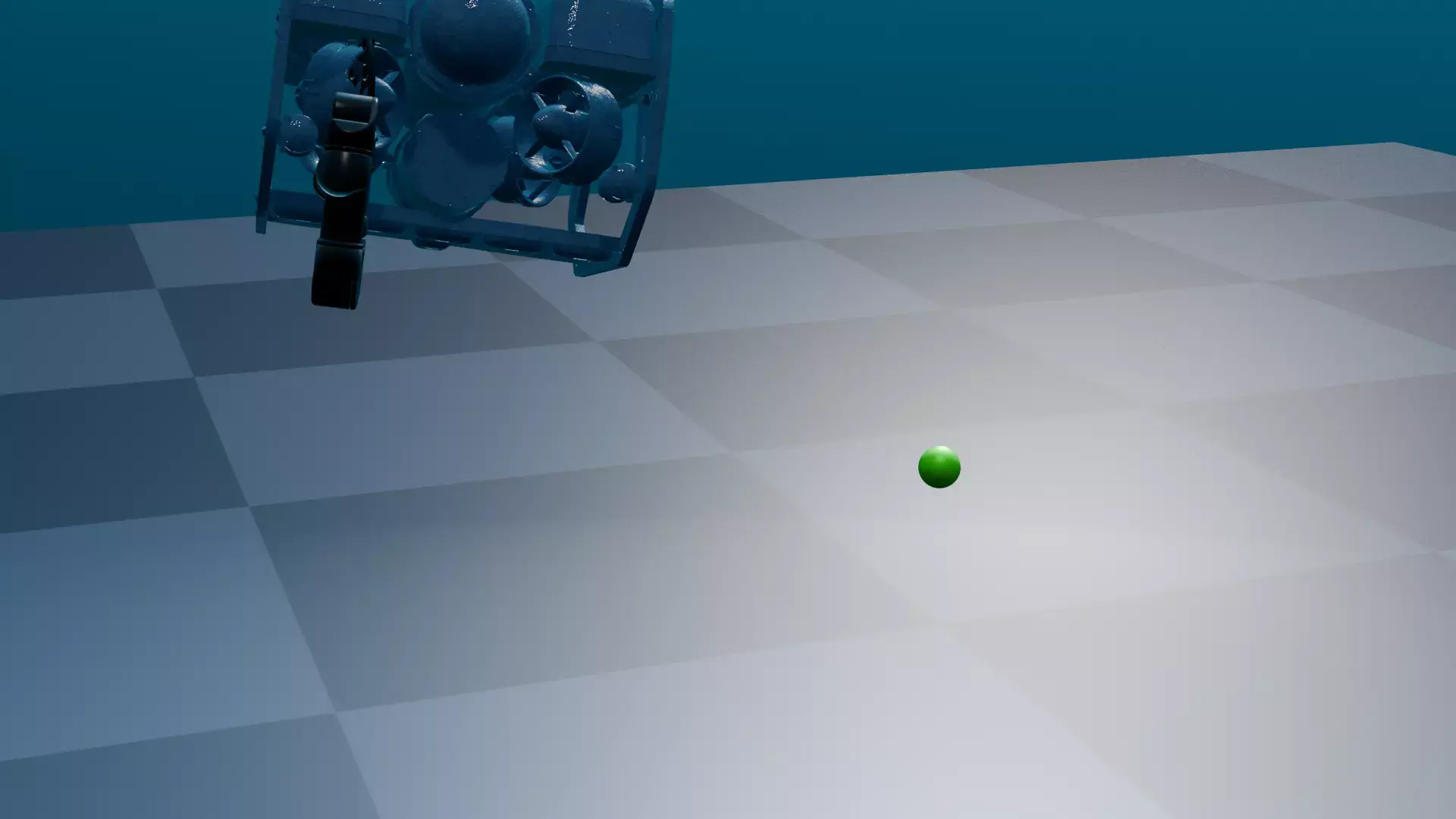

My application of interest is robotics in harsh environments, such as underwater robots that need to perform manipulation tasks that are often simple in themselves, but made difficult by degraded perception and energetic disturbances.

Field Deployments #

I have been leading the charge on various aspects of field deployment as part of ARIAM Hub’s Capability Demonstrator. This means getting out of the lab and into the field, collecting data and deploying robots that don’t always do what you expect, at sites we don’t control.

My work has been spread across our ROVs, ground vehicle, and Spot.

I work on:

- controlling and commanding our robots

- ensuring their software stack is robust and reliable in the field

- ensuring they collect the data we expect and with the right fidelity and coverage for our applications

- logistics of deploying our robots (which are big and difficult to move) to sites (which are often hard to get into and operate in)

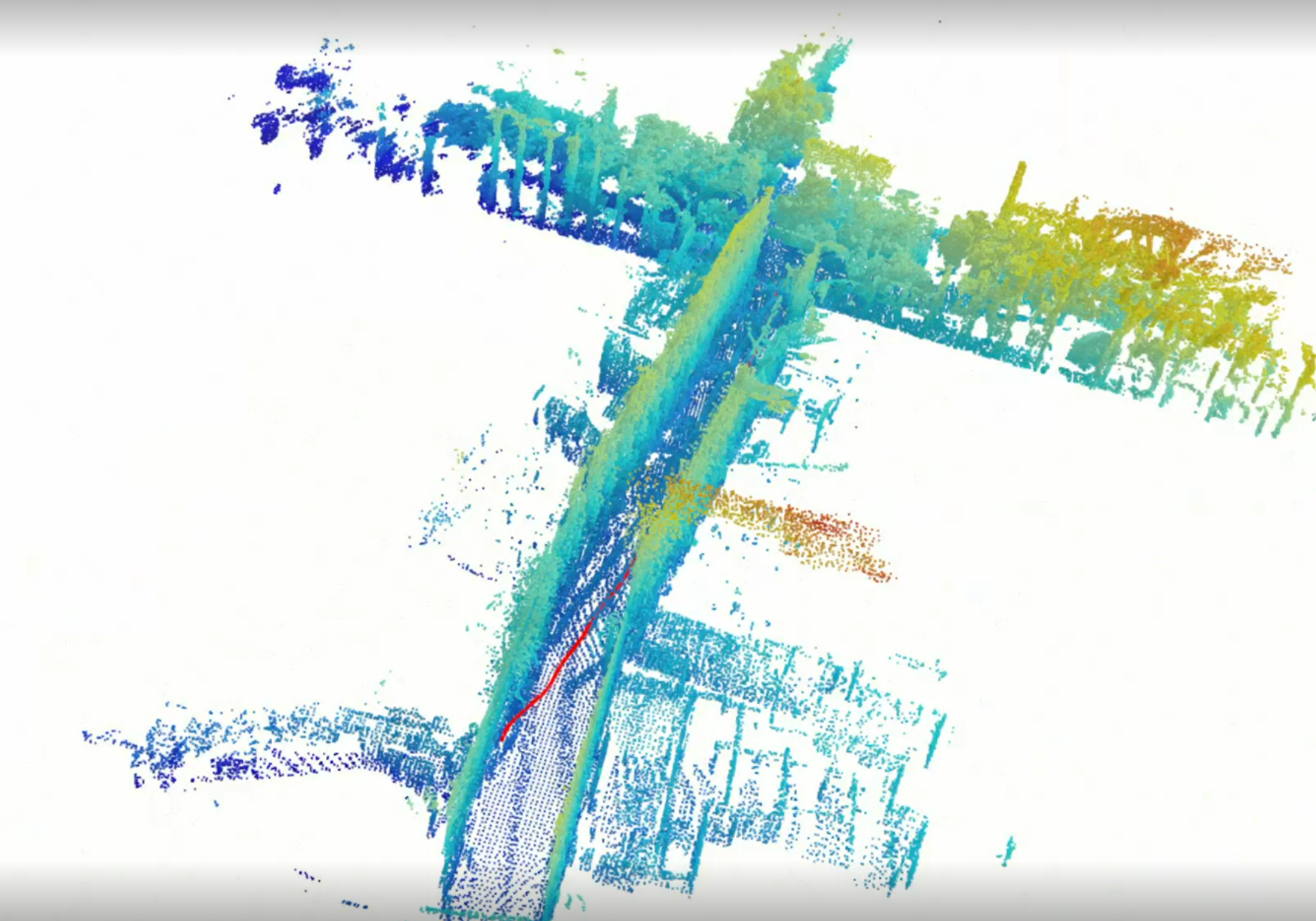

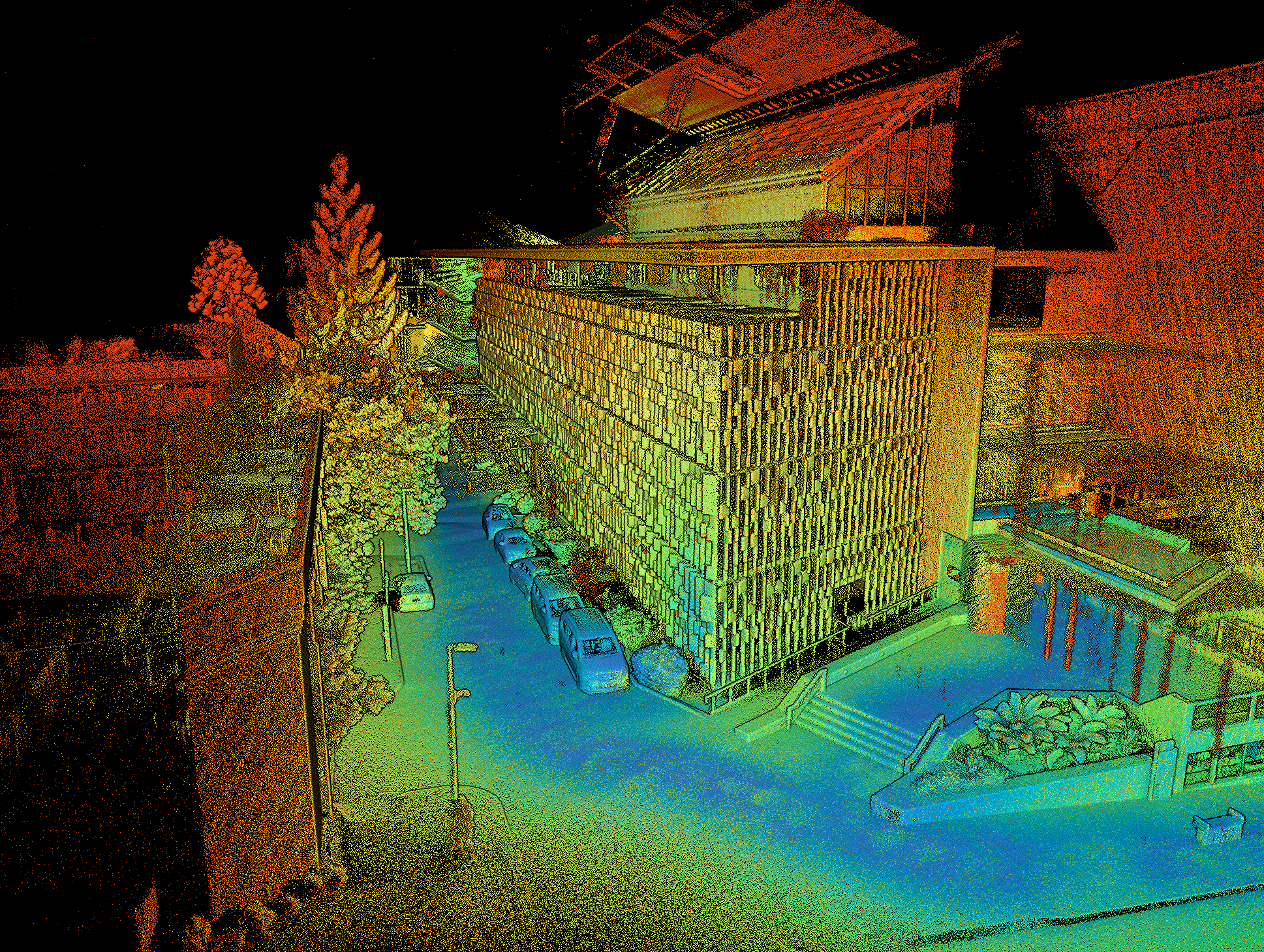

- processing our data through photogrammetry, LiDAR SLAM reconstruction, etc appropriate for developing digital twins

3D Reconstruction #

I’m also a big fan of using our 3D reconstructions to do useful work. The scans and reconstructions I’ve made have been used to:

- help design and plan ship repairs for the MV Cape Don, including creating composite patches to repair the ship hull

- create maps for use in operations research algorithms for planning long term dry dock operations

- create environments for robot simulations for accurate-to-life testing

Industry Experience #

Drone autonomy #

My role at my last company, Emesent, involved autonomous drones that operated in harsh, GPS-denied environments such as mine sites and caves. Our drones created high-resolution scans of environments with Wildcat SLAM, which clients used alongside autonomous navigation (including waypoints, exploration and collision avoidance).

As part of my role I was involved in:

- Redesigning our entire GPU-accelerated perception system from scratch for improved performance, modularity, etc.

- Overhauling our aging flight control system to improve robustness

- Creating the new task/behaviour management system, which was needed to better handle the complex sensing failures that can occur

- Making improvements to our path planner, working on some R&D projects, etc.

- Testing autonomy changes (I have a 25kg Multirotor Remote Pilot License!)

Spot Autonomy Integration #

Emesent was part of the CSIRO-Emesent DARPA SubT team! While my career timing wasn’t lucky enough for me to work on the challenge directly, we did adopt parts of our SubT autonomy stack and started integrating them into the production versions of both the drone and Spot.

My work involved writing Spot driver and interface nodes, and integrating its traversability and path planner with the new task and behaviour planning systems that I worked on for the drones. The Spot also uses the same new and modular perception system I worked on for our drones! In the end, our system can run the same codebase to control both drones and the Spot, and be hot-swapped between them.

Space Autonomy Research #

A side project I have picked up during my PhD is some work with others in my centre on space autonomy, in particular reliable satellite operations and inspection. Due to the lack of atmosphere, satellites in space have extremely harsh reflections (direct sunlight, reflective surfaces), and deep shadows (no diffuse light).

We have been working on problems of designing trajectories for inspecting satellites with known visual models of the target satellite. Our approach involves integrating high quality renderers as visual models (such as Blender and Mitsuba3) along with our orbital dynamics simulation, and adding visual objectives to directly optimise orbital parameters and transfers.

This work has been accepted at i-SAIRAS 2024 and IAC 2025, with more to come!

Other projects #

Gyroscope Inverted Pendulum Swing-up #

One of my university projects was to build a swing-up and balancing controller for a pendulum, except that, unlike a traditional cartpole, the pendulum was coupled to a gyroscope torquer. A flywheel spun at 400 rpm on the inner axis, held at speed by a PID controller, and tilting the flywheel induced gyroscopic torques about the pendulum axis, which drove the swing-up and balancing.

The swing-up uses an energy-based Lyapunov controller, the states are estimated with an EKF, and the balancing is handled by an LQR.
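To give a feel for that control structure, here is a minimal sketch in Python. It is not the project code: it assumes a plain torque-driven pendulum rather than the gyroscope-actuated rig, all parameter values, gains and switching thresholds are made up for illustration, and the state is assumed to be measured directly (so the EKF is left out). The swing-up law comes from the Lyapunov function V = E²/2, where E is the energy relative to upright rest, and the LQR gains come from the closed-form Riccati solution for this two-state linearisation.

```python
import math

# Hypothetical parameters for a plain torque-driven pendulum (NOT the gyroscope rig).
G, L, M = 9.81, 0.5, 0.2       # gravity [m/s^2], rod length [m], bob mass [kg]
INERTIA = M * L**2             # point-mass inertia about the pivot [kg m^2]
U_MAX = 0.5                    # torque limit [N m]; below M*G*L, so a swing-up is needed


def wrap(theta):
    """Wrap an angle to [-pi, pi); theta = 0 is upright."""
    return (theta + math.pi) % (2.0 * math.pi) - math.pi


def energy(theta, omega):
    """Mechanical energy, defined so that upright at rest has E = 0."""
    return 0.5 * INERTIA * omega**2 + M * G * L * (math.cos(theta) - 1.0)


def lqr_gain(q_theta=10.0, q_omega=1.0, r=1.0):
    """LQR gains (k_theta, k_omega) for the upright linearisation.

    Linear model: theta_ddot = a * theta + b * u. For this 2-state,
    1-input system the continuous-time Riccati equation has a closed form.
    """
    a, b = G / L, 1.0 / INERTIA
    p12 = r * (a + math.sqrt(a**2 + q_theta * b**2 / r)) / b**2
    p22 = math.sqrt(r * (2.0 * p12 + q_omega)) / b
    return b * p12 / r, b * p22 / r


def control(theta, omega, gains, k_energy=2.0):
    """Energy-based swing-up far from upright, LQR near upright."""
    theta = wrap(theta)
    if abs(theta) < 0.3 and abs(omega) < 2.0:   # illustrative capture region
        u = -(gains[0] * theta + gains[1] * omega)
    else:
        # dE/dt = omega * u, so with V = E^2 / 2 the choice u = -k * E * omega
        # gives dV/dt = -k * E^2 * omega^2 <= 0 (clipping keeps the sign).
        u = -k_energy * energy(theta, omega) * omega
    return max(-U_MAX, min(U_MAX, u))


def simulate(t_end=15.0, dt=0.001):
    """Simulate from hanging down; returns the final (theta, omega)."""
    # A small initial velocity is needed: the swing-up law outputs zero at rest.
    theta, omega = math.pi, 0.1
    gains = lqr_gain()
    for _ in range(int(t_end / dt)):
        u = control(theta, omega, gains)
        alpha = (G / L) * math.sin(theta) + u / INERTIA
        omega += alpha * dt       # semi-implicit Euler step
        theta += omega * dt
    return wrap(theta), omega


if __name__ == "__main__":
    final_theta, final_omega = simulate()
    print(f"final angle from upright: {final_theta:.4f} rad, rate: {final_omega:.4f} rad/s")
```

Switching on a fixed region around upright is the simplest hand-over between the two controllers. On real hardware the thresholds would need tuning against actuator limits and estimator noise.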